https://blog.carlesmateo.com

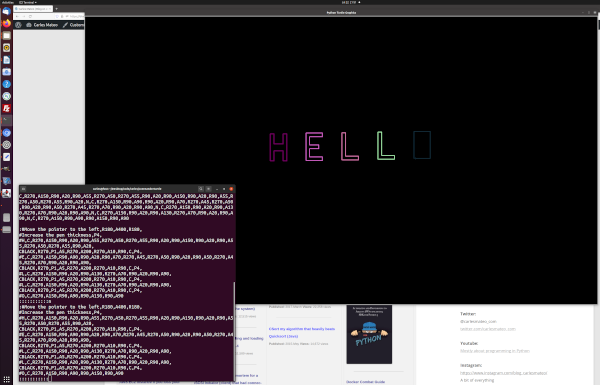

https://blog.carlesmateo.com/commander-turtle

A game about programming and drawing for children, available in English and Catalan.

It is inspired by the old Logo.

It aims to give children an easy and fun way to learn to code.

It also lets them share and replay their creations by sharing the code.

- My books in English, mostly about Python: https://blog.carlesmateo.com/my-books/

- My author page at LeanPub: https://leanpub.com/u/carlesmateo

- Information about my programming classes: https://blog.carlesmateo.com/classes-and-mentor/

- My YouTube engineering channel: https://www.youtube.com/channel/UCYzY-2wJ9W_ooR64-QzEdJg

- My Twitch engineering channel: https://www.twitch.tv/carlesmateo_com

- My Twitter: https://twitter.com/carlesmateo_com

- My LinkedIn: https://www.linkedin.com/in/carlesmateo

- My Open Source projects:

  - cmemgzip - Compress files into memory when there is no free disk space

  - cmips

  - carleslibs - Python libraries to quickly build CLI applications

  - ctop.py - Monitor the infrastructure

  - MT Notation for Python